A Guide to AI Agents for Data Engineers

What are AI Agents

AI Agents are applications that use language models to reason about decisions and take sensible actions to answer a user or a machine’s prompt or question. AI agents are intended to perform tasks that require some reasoning that would otherwise be difficult to implement in code. With AI agents, tasks can be assigned to one or split between multiple agents working together as a team, in a chain.

You can think of this agentic revolution as akin to the HTML website revolution of the 1990s. In the ’90s everyone built websites to allow users to interact with their brand. I remember building websites for my many side projects, my dad, my friends and eventually as a side hustle making a couple hundred dollars a week. Over time, these websites became more capable, adding CSS styling, AJAX requests, audio/video and much more. Today websites are full-blown applications accessible from anywhere. Agents pick up where websites left off, adding LLMs, giving them the ability to reason and make sensible decisions. These intelligent applications are revolutionizing how we do everything, and they are getting scary good, fast!

How do AI Agents work

Agents are given a role, background and objectives to complete. For example, you can build an agent that is a Data Quality Engineer. This engineer is experienced in identifying duplication in columnar data. Their objective or goal is to incrementally query the product_sko table in the Snowflake data warehouse and flag any row that is a duplicate. They will flag the row by inserting “true” in the is_duplicate column.
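
To make the role, background and objective idea concrete, here is a minimal sketch in plain Python. The `AgentSpec` class and its `to_system_prompt` method are hypothetical names I made up for illustration, not part of any agent framework. It only shows how the three pieces can be captured as data and rendered into the instruction text an LLM would receive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    """Role, background and objective for one agent (hypothetical structure)."""

    role: str
    background: str
    objective: str

    def to_system_prompt(self) -> str:
        # Render the three fields into the instruction text sent to the LLM.
        return (
            f"You are a {self.role}.\n"
            f"Background: {self.background}\n"
            f"Objective: {self.objective}"
        )


data_quality_agent = AgentSpec(
    role="Data Quality Engineer",
    background="Experienced in identifying duplication in columnar data.",
    objective=(
        "Incrementally query the product_sko table in the Snowflake data "
        "warehouse and flag duplicate rows by setting is_duplicate to true."
    ),
)

print(data_quality_agent.to_system_prompt())
```

Keeping the spec as data, separate from the code that calls the model, makes it easy to version and test the wording on its own.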

Agents can connect to tools that allow them to perform tasks based on decisions they make, using an LLM you choose. These tools can be external APIs, databases, custom application logic or really anything. Agents use these tools to collect information that would allow them to complete their defined objective. Kind of like you and me 🤷‍♂️

Agents connect to each other in chains to allow complex workflows to be executed involving multiple decisions and actions. For example, consider a workflow that allows users to onboard new datasets by themselves. The first agent will accept a user prompt that describes the new dataset and provides a way to access it. The agent’s LLM will parse the context and add it to the data catalog making future discovery and understanding easy. It then asks another agent to retrieve the dataset, extract its schema, design a basic data model, infer meaning of each column, create the new tables in the warehouse and update the catalog with the additional metadata. Lastly, a third agent is called to inspect the source data for errors, break it up into a simple data model and load it into the warehouse tables.

Agents can be fully developed, hosted and deployed on a provider’s SaaS platform, like CrewAI, or self-managed and deployed on your own compute infrastructure. Since they are often Python- or JavaScript-based applications, they can be deployed and managed like any other application.

What are the similarities to data engineering

Agents and agent chains are like Airflow tasks and DAGs, it’s really that simple 🙄

Short rant: The fancy AI agent dev tools you often see promoted like CrewAI, n8n, Make, LangGraph and other no-code/low-code solutions produce Python functions that are orchestrated to run as DAGs. Everything they do, you can do with Airflow and Python (or your choice of language). You don’t need to learn or deploy a new toolchain to build or manage AI agents. Don’t get swept up in the dev-tool hype.

There are technically two types of agents - AI and non-AI based agents.

The non-AI agent implementation is a piece of code designed to complete a basic task, like querying a database or calling an external API or parsing a file. As data engineers we do this all day, every day. As you’ll see in a bit, these will become “Tools”.

The AI agent implementation is a piece of code that receives a prompt, often as text, but it can also be an image, audio or video, and feeds it into an LLM, by API call, to make a decision or produce an answer. This is what everyone is getting excited about and could be new and scary to some data engineers. We’ll unpack this in more detail and you’ll see why there is more opportunity here than scary demons.

Both of these types of agents can be chained together in a DAG and orchestrated to run on a schedule or be triggered by an external event, like a user prompt. Context or state can be shared between agents, feeding the output of one agent as an input to the next.
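
Here is a rough sketch of that chaining in plain Python, with no orchestrator involved. Everything in it is an assumption made for illustration: the `State` dictionary, the `fetch_rows` task, and `fake_llm`, which stands in for a real provider API call. In practice each function would be an Airflow task (or similar) and the state would be passed through your orchestrator.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Agent = Callable[[State], State]


def fetch_rows(state: State) -> State:
    # Non-AI agent: a plain task. Hard-coded rows stand in for a database query.
    rows = [{"sku": "A1", "name": "mug"}, {"sku": "A1", "name": "Mug"}]
    return {**state, "rows": rows}


def make_ai_agent(llm: Callable[[str], str]) -> Agent:
    # AI agent: builds a prompt from shared state and asks the LLM to decide.
    def decide(state: State) -> State:
        prompt = f"Are these rows duplicates? Answer yes or no.\n{state['rows']}"
        return {**state, "decision": llm(prompt)}

    return decide


def run_chain(agents: List[Agent], state: State) -> State:
    # Run agents in order, feeding each one's output state into the next.
    for agent in agents:
        state = agent(state)
    return state


def fake_llm(prompt: str) -> str:
    # Stand-in for a provider API call; always answers "yes".
    return "yes"


result = run_chain([fetch_rows, make_ai_agent(fake_llm)], {"trigger": "user prompt"})
print(result["decision"])
```

Injecting the LLM as a callable keeps the chain testable without network access, which is handy when you start evaluating prompts.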

DAGs are deployed and agent tasks are executed on standard compute as you would any other data engineering DAG. There is typically little need for your own GPU clusters since the LLM work is handled by a provider like OpenAI or AWS Bedrock, unless you’re actually training and hosting your own models. Monitoring and troubleshooting is closer to how SWEs manage their application code but often not too different from how DEs manage data processing code, as best practices have been converging over the years.

What are the differences from data engineering

Although there are many similarities between agents and common DE tasks, they begin to differ as AI is introduced to make decisions.

In 80% of the cases, DEs deal with workflows that have very well defined logic and don’t require any dynamic reasoning - read a CSV, extract the schema, run some quality tests and load it into the warehouse table. These don’t need AI and should avoid incorporating it to keep costs, complexity and latency low.

In the remaining 20% (+/-) of cases, engineers need to write more complex logic: robust code that defines the task logic, accounting for any decisions that need to be made along every possible execution path. The more complex the task and the wider its range of input and output variations, the harder the decision logic becomes to implement. This often requires senior+ level engineers with years of experience and even then the resulting code is fragile and requires constant adjustments to address scale and new variables.

By using AI, the complex logic is described in plain English and the LLM is responsible for making the hard decisions based on dynamically changing inputs. This means that engineers now need to wrestle with:

Being able to clearly describe the solution’s logic in words, not code

Giving up some control over how decisions are derived

Inconsistencies in behavior and performance

Today’s AI models are far from perfect. They still need a lot of help, additional context and guardrails to make sure they produce the results you’d expect, consistently. This requires a fair bit of custom logic, iteration and trial and error to get right. A well known struggle for data engineers, but this time with more English and less code.

Note for new data engineers: get really good at prompt + model evaluation and tuning. Each model works differently and when designing an AI agent, ensuring consistent and accurate results is key to success. As you enter the DE workforce, know the differences between models and get practice evaluating prompts against their output. It will come in handy with potential employers.

Over time, as these AI models mature, their ability to reason and make decisions will also improve, reducing the amount of context and guardrails you need to implement. This eliminates a lot of the word-smithing, language gymnastics and custom code you’ll need to employ to get things working right.

AI concepts data engineers need to know

RAG systems

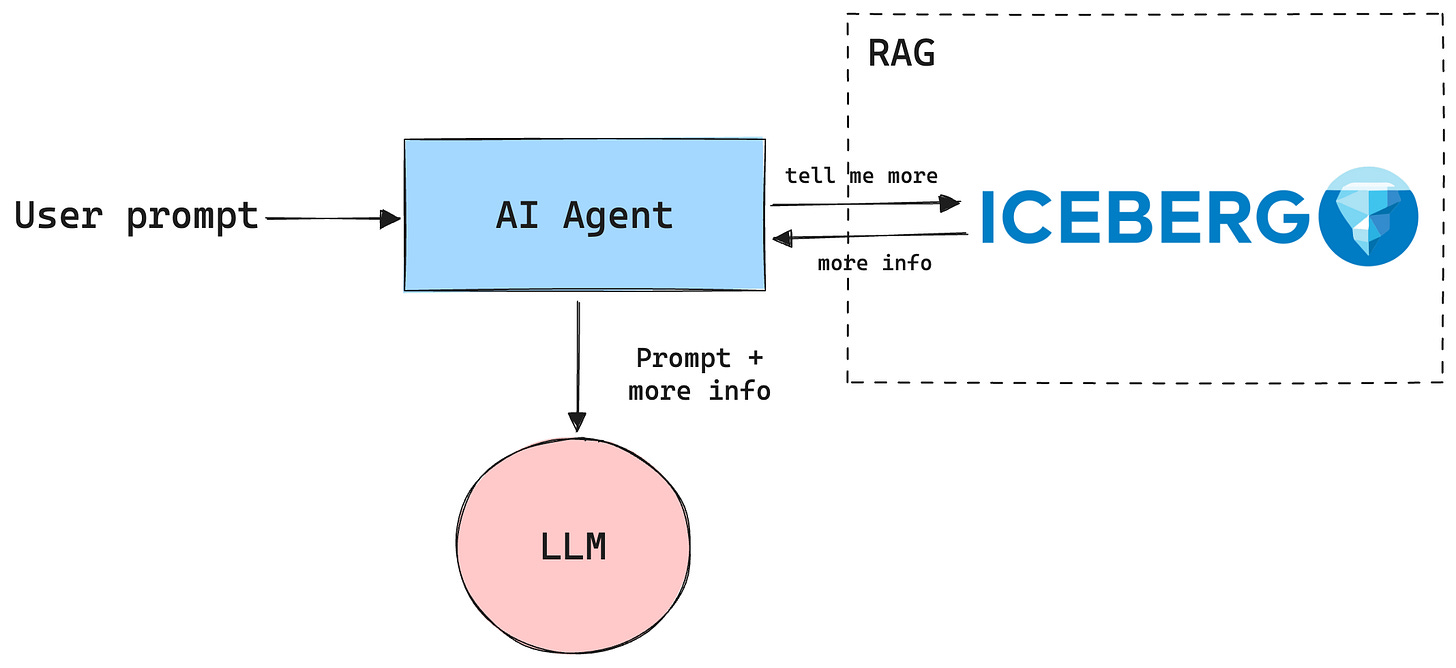

Retrieval-Augmented Generation is a fancy way of saying “look-up”. Essentially, a RAG system is a solution to look up additional information, from an operational DB, warehouse, API or whatever, to enrich a prompt before it is sent to an LLM. In popular AI literature, RAGs are often built using vector stores and embedding generation. But these aren’t always necessary. It can simply be built with just a SQL query to your lakehouse or an API call to your Elasticsearch cluster.

Vector stores are search-optimized databases for vector data. Vectors are populated by ingesting text (or images, videos and audio) and running it through embedding models that output an array of numbers representing the input. A vector store persists and indexes these vectors, making it fast to perform similarity searches, e.g. looking up the question from the prompt against a list of knowledge-base article summaries.

There are pros and cons to implementing vector stores in your architecture which I won’t get into here, however as a data engineer being asked to build a RAG, keep it simple and try to leverage your existing infrastructure and tools to reduce cost and complexity. Remember, an effective RAG can be as simple as a SQL query to your data lakehouse or warehouse.
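
As a sketch of the “RAG can be a SQL query” point, the snippet below looks up context with plain SQL and prepends it to the user question. The `kb_articles` table, its rows and the `enrich_prompt` helper are all made up for illustration, and an in-memory SQLite database stands in for your warehouse or lakehouse.

```python
import sqlite3


def build_kb() -> sqlite3.Connection:
    # In-memory SQLite stands in for your warehouse or lakehouse.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE kb_articles (topic TEXT, summary TEXT)")
    conn.executemany(
        "INSERT INTO kb_articles VALUES (?, ?)",
        [
            ("refunds", "Refunds are issued within 5 business days."),
            ("shipping", "Standard shipping takes 3 to 7 days."),
        ],
    )
    return conn


def enrich_prompt(conn: sqlite3.Connection, question: str, topic: str) -> str:
    # Retrieval: look up context rows with a parameterized SQL query.
    rows = conn.execute(
        "SELECT summary FROM kb_articles WHERE topic = ? ORDER BY summary",
        (topic,),
    ).fetchall()
    context = "\n".join(f"- {summary}" for (summary,) in rows)
    # Augmentation: prepend the retrieved context to the user's question.
    return f"Use only this context:\n{context}\n\nQuestion: {question}"


conn = build_kb()
print(enrich_prompt(conn, "How long do refunds take?", "refunds"))
```

The enriched string is what you would send to the LLM. How the topic gets picked (a keyword match, a classifier, another LLM call) is left open here.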

Multi-modal data processing

Multi-modal is another fancy term for saying “different types” of data. Yes, AI should probably get its own urban dictionary 🙄

This is one area that I feel is very different for data engineers. Traditionally DEs worked with structured and semi-structured textual data that fit into explainable schemas and can be formed into data models that make business sense. As companies branch into more use cases, engineers need to handle unstructured or binary data like PDFs, images, video and audio. This type of data doesn’t currently work well with the incumbent data engineering tools we use every day, nor does it have any structure we can easily reason about.

AI, however, makes working with unstructured data easier. It allows engineers to extract meaning without the need to implement complex and costly ingestion, processing and inference pipelines. Simply point an LLM to a video and it will tell you almost everything you want to know about it. This information can be modeled and stored in your lakehouse for future analytics and enrichment of AI agent flows.

For current and new data engineers, I highly encourage you to spend more time learning the tools and techniques used to process and analyze unstructured data. Although we commonly refer to images and video, this also applies to PDFs and other documents containing business and legal contracts, SOWs, financial records and much more. As your company tries to leverage all of its data, unstructured sources are becoming a priority and you should be ready.

Vector embeddings

Vector embeddings are an approach for converting and representing textual, visual or audio information as an array of numbers that captures its meaning in context. This array of numbers is how LLMs and other ML models efficiently interact with information. For example, with a multi-modal embedding model that maps text and images into the same vector space, the numbers (vector) representing (embedding) the word “dog” in a sentence will be similar to the numbers (vector) identifying an image of a dog. So when an AI agent wants to find all images of dogs, it can do a vector similarity search using the “dog” vector on a vector database holding metadata and vector representations of lots of images - as part of a RAG system.
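
To show what a vector similarity search boils down to, here is a small sketch using cosine similarity, one common similarity measure. The three-dimensional vectors and file names are invented for illustration. Real embeddings come from an embedding model and have hundreds or thousands of dimensions, and a vector database replaces the brute-force scan with an index.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    # Cosine of the angle between two vectors: 1.0 means same direction.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(
    query: List[float], index: Dict[str, List[float]], k: int
) -> List[Tuple[str, float]]:
    # Brute-force scan; a vector database replaces this with an index.
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Made-up 3-dimensional vectors standing in for real image embeddings.
image_index = {
    "dog_park.jpg": [0.9, 0.1, 0.0],
    "puppy.png": [0.8, 0.2, 0.1],
    "sailboat.jpg": [0.0, 0.1, 0.9],
}
dog_query = [1.0, 0.0, 0.0]  # pretend this is the embedding of the word "dog"

for name, score in top_k(dog_query, image_index, k=2):
    print(name, round(score, 3))
```

With these toy numbers the two dog images rank first and the sailboat is left out.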

You’re probably thinking, if I already store metadata about these images, can’t I just SELECT * FROM image_metadata_tbl WHERE type = 'dog'? Of course you can and this is why vector databases aren’t always required. So don’t just blindly follow the common wisdom, see if it’s actually useful for your use case.

Different embedding models generate different vectors. For example, the OpenAI text-embedding-3-small produces a vector with 1536 floating point numbers and text-embedding-3-large produces a vector with 3072 floating point numbers. The bigger the vector, often, the more detail it can capture. The bigger the embedding model the more processing power it requires resulting in higher latency and cost to generate and store. There are optimizations of course, but this is where you need to dive into the details and understand the impact of each change you intend to make.
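
A quick back-of-the-envelope calculation shows how vector size turns into storage. This sketch assumes each number is stored as a 32-bit float (4 bytes), which is my assumption and not something every store does, and it counts only the raw vectors, not indexes or metadata.

```python
BYTES_PER_FLOAT32 = 4  # assumption: vectors stored as 32-bit floats


def raw_vector_bytes(dimensions: int, num_vectors: int) -> int:
    # Raw payload only; index structures and metadata add more on top.
    return dimensions * BYTES_PER_FLOAT32 * num_vectors


for model, dims in [("text-embedding-3-small", 1536), ("text-embedding-3-large", 3072)]:
    gb = raw_vector_bytes(dims, 1_000_000) / 1e9
    print(f"{model}: {gb:.2f} GB per million vectors")
```

Under that assumption a single 1536-dimension vector is 6,144 bytes, so a million of them is roughly 6 GB, and the 3072-dimension model doubles that.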

There are many different embedding models that optimize for different use cases. The primary use case for embeddings is similarity search. If that’s not part of your workflow or you have other means to find information, you can skip it altogether. However as you continue to add more AI components to your workflow and agents, you will need to work with embedding vectors at some point.

As a data engineer, I highly encourage you to learn more about vector embeddings and vector databases as it will eventually come into your purview.

Task and agent orchestration

As I already mentioned throughout this post, a big part of AI agents and agents in general is the orchestration of tasks comprising a workflow. There are many up and coming dev-tools and solutions designed to “simplify” the process of chaining and orchestrating tasks required to implement an agent. These are nothing more than fancy Airflow alternatives. Yes they offer pre-built templates and simple to use UI for common agent workflows, but are not required for you to implement or use an agent.

Furthermore, these tools aren’t designed for or mature enough to handle the needs of an enterprise-grade agent. For example, they may implement “agent memory” that’s used to share state between agents and tasks. However, this memory isn’t properly persisted or may leverage an in-memory DB that won’t scale beyond a single compute node.

As a data engineer, you already have a bag of mature, scalable and resilient tools for implementing task orchestration, scheduling and execution. Use it.

Leveraging your existing tools will let you get to production grade agent deployments quicker and will allow you to properly monitor and maintain them as usage grows. Spend time understanding how agents can pass information between tasks in a resilient manner. Develop building-block tasks that make it easy to plug in components that implement common actions like SQL queries to your lakehouse, reporting logs, tracking ML interaction history, and more. Build out your ecosystem to make building agents simple, don’t just rely on the hot-tool-of-the-month.
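
One way to pass information between tasks in a resilient manner is to persist each task’s output keyed by run and task name, so a retried task or a task scheduled on another node can pick up where things left off. The sketch below is a made-up building block (`RunStateStore` is a hypothetical name) that uses SQLite only to stay self-contained. In practice you would point it at a durable, shared database or your orchestrator’s own state mechanism.

```python
import json
import sqlite3
from typing import Any, Dict


class RunStateStore:
    """Persists per-run agent state so a retried or relocated task can resume."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS agent_state ("
            "run_id TEXT, task TEXT, payload TEXT, PRIMARY KEY (run_id, task))"
        )

    def save(self, run_id: str, task: str, payload: Dict[str, Any]) -> None:
        # Upsert keeps task retries idempotent: the latest output wins.
        with self.conn:
            self.conn.execute(
                "INSERT OR REPLACE INTO agent_state VALUES (?, ?, ?)",
                (run_id, task, json.dumps(payload)),
            )

    def load(self, run_id: str, task: str) -> Dict[str, Any]:
        # Returns an empty dict when the task has not written anything yet.
        row = self.conn.execute(
            "SELECT payload FROM agent_state WHERE run_id = ? AND task = ?",
            (run_id, task),
        ).fetchone()
        return json.loads(row[0]) if row else {}


# In-memory database for the demo; use a file or shared database in practice.
store = RunStateStore(sqlite3.connect(":memory:"))
store.save("run-1", "extract_schema", {"columns": ["sku", "price"]})
print(store.load("run-1", "extract_schema"))
```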

Prompt engineering

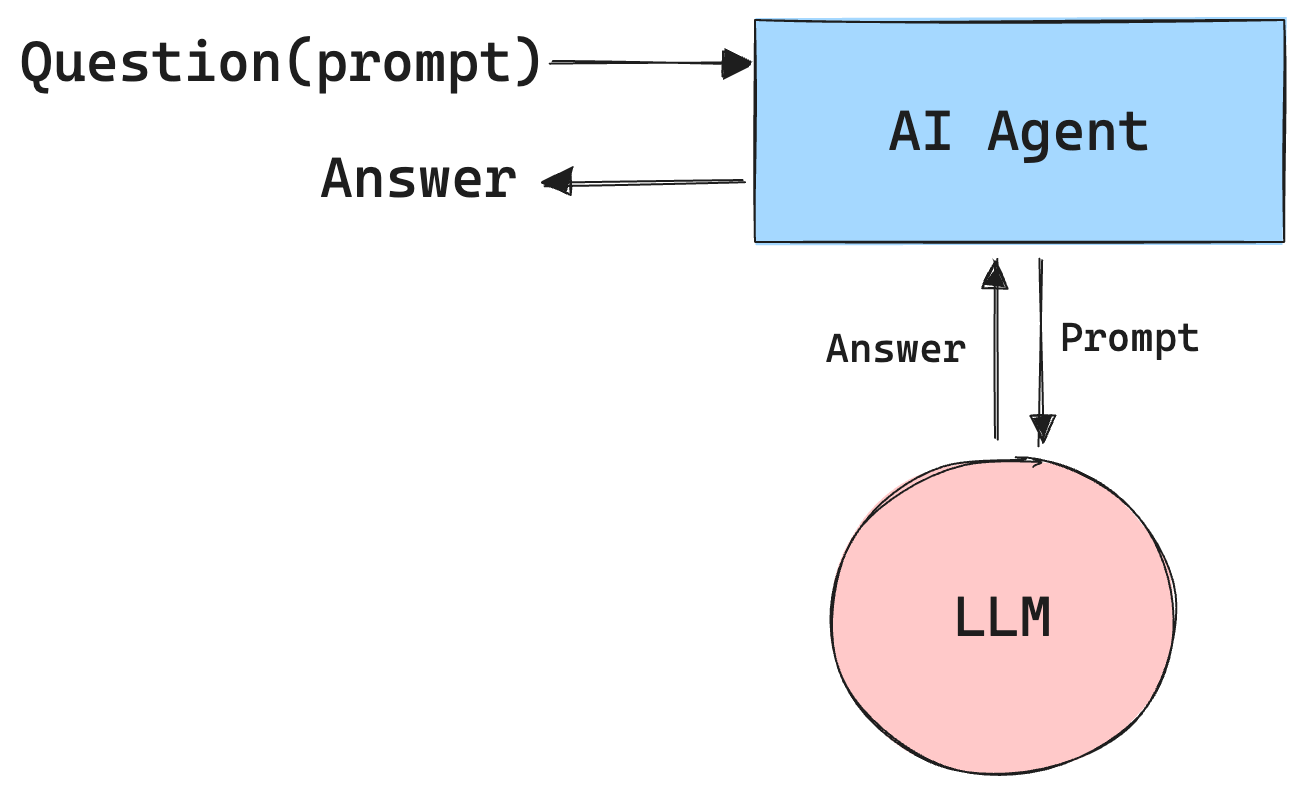

Prompts are text instructions users or machines pass to an LLM to instruct it of a task to complete. Most commonly we talk about user prompts, like the questions you ask ChatGPT. The diagram above shows a simple flow.

As shown above, an AI agent is triggered by a user prompt asking it to perform a task or provide an answer to a specific question. For tasks that the LLM can answer directly, there is little for you to do.

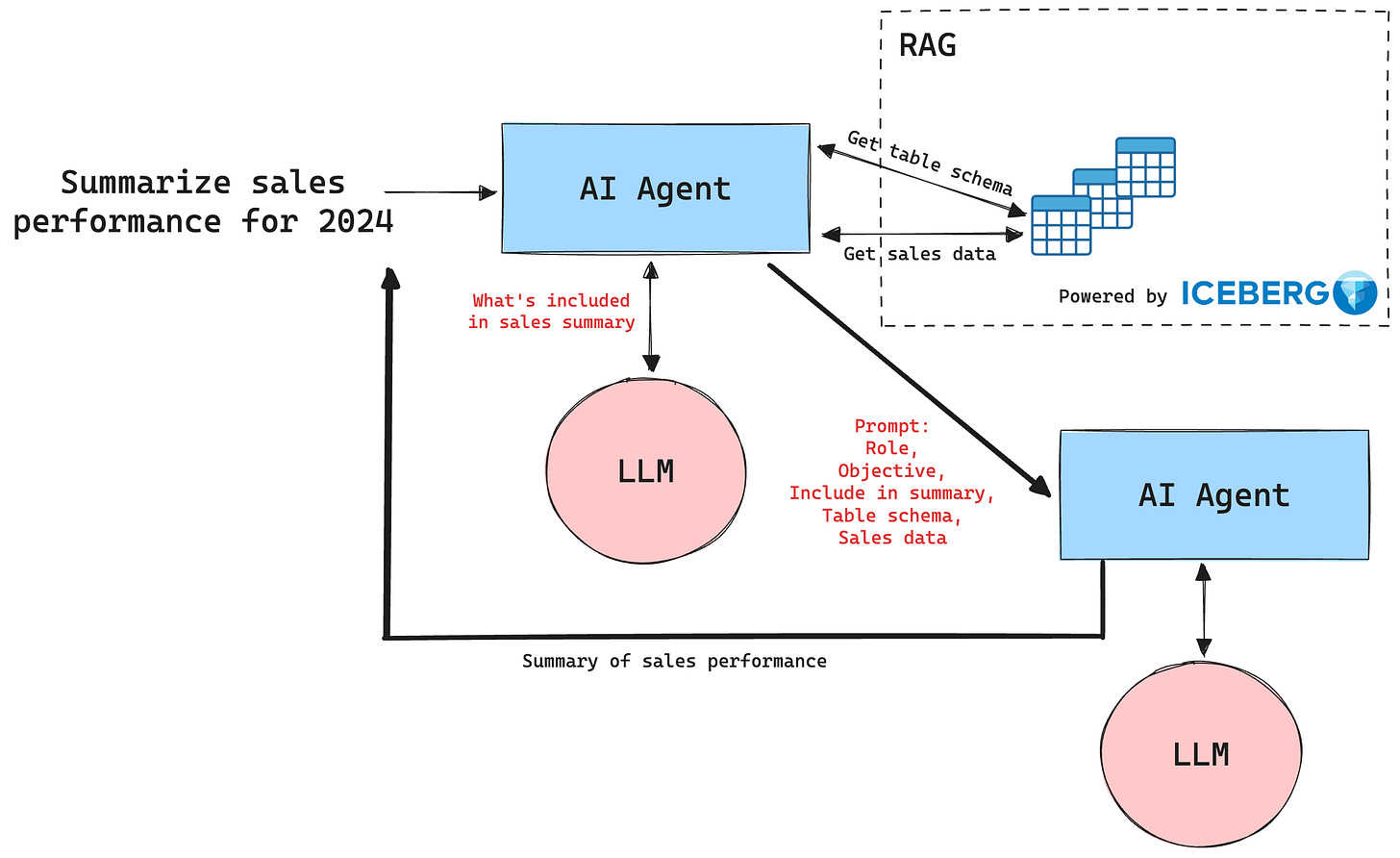

However, for multi-step or enterprise-specific tasks, those that require additional information only available to your company, you are required to enrich the original user prompt with proprietary context (via RAG). Furthermore, as instructions are passed from one AI agent to another, those prompts must also be carefully designed to produce the desired results.

As you can see in the example diagram above, the first prompt is very different than the original user question. It attempts to figure out what additional information it needs to generate for the answer to the user’s prompt to be complete. From there it looks up sales records in the Iceberg-based lakehouse and uses all of this information to construct a new prompt that is sent to the second AI agent. The second prompt is much more involved. It includes information such as a role, like “you are a sales analyst responsible for end of year review of sales performance”, and an objective, like “you need to generate a comprehensive performance analysis of all sales transactions closed and won in calendar year 2024….”. It also includes the information gathered by the first agent, like a list of other items to include in a sales summary, the schema of our sales tables so it can refer to specific metrics by name and the actual sales data to aggregate and summarize results.
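
A second prompt like the one described can be assembled with ordinary string templating. The sketch below is only an illustration: the function name, the section labels, the schema and the sample rows are all invented, and the exact layout that works best depends on the model you use.

```python
from typing import Dict, List


def build_analyst_prompt(
    role: str,
    objective: str,
    summary_items: List[str],
    schema: Dict[str, str],
    sales_rows: List[Dict[str, object]],
) -> str:
    # Assemble each piece of context into its own labeled section.
    items = "\n".join(f"- {item}" for item in summary_items)
    columns = "\n".join(f"- {name}: {dtype}" for name, dtype in schema.items())
    rows = "\n".join(str(row) for row in sales_rows)
    return (
        f"Role: {role}\n\n"
        f"Objective: {objective}\n\n"
        f"Include in the summary:\n{items}\n\n"
        f"Sales table schema:\n{columns}\n\n"
        f"Sales data:\n{rows}"
    )


prompt = build_analyst_prompt(
    role="You are a sales analyst responsible for end of year review of sales performance.",
    objective="Generate a performance analysis of sales closed and won in calendar year 2024.",
    summary_items=["Total revenue", "Top regions"],  # hypothetical output of the first agent
    schema={"region": "string", "amount": "decimal"},  # hypothetical table schema
    sales_rows=[{"region": "east", "amount": 100}, {"region": "west", "amount": 250}],
)
print(prompt)
```

Keeping each section in a separate parameter makes it easier to test one change at a time when you evaluate prompt variations.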

All of this is actually more difficult than it seems and requires a deep understanding of how to engineer, test and manipulate text prompts that get the LLMs at each step to behave in a way that meets your needs.

As a data engineer, this is a brand new space for professional growth. Spend time understanding prompt best practices for the LLMs you plan to use. There are nuances between them. Some key things to get good at:

Being as specific and concrete as possible with your language.

Splitting it up, don’t try to do too much in a single prompt.

Testing prompts with co-workers, if they don’t get it, the LLM won’t either.

Asking the LLM what isn’t clear about your prompt, not perfect, but helpful.

Evaluating the results of your prompts and fine-tuning your language.

Tool Use for custom function calling

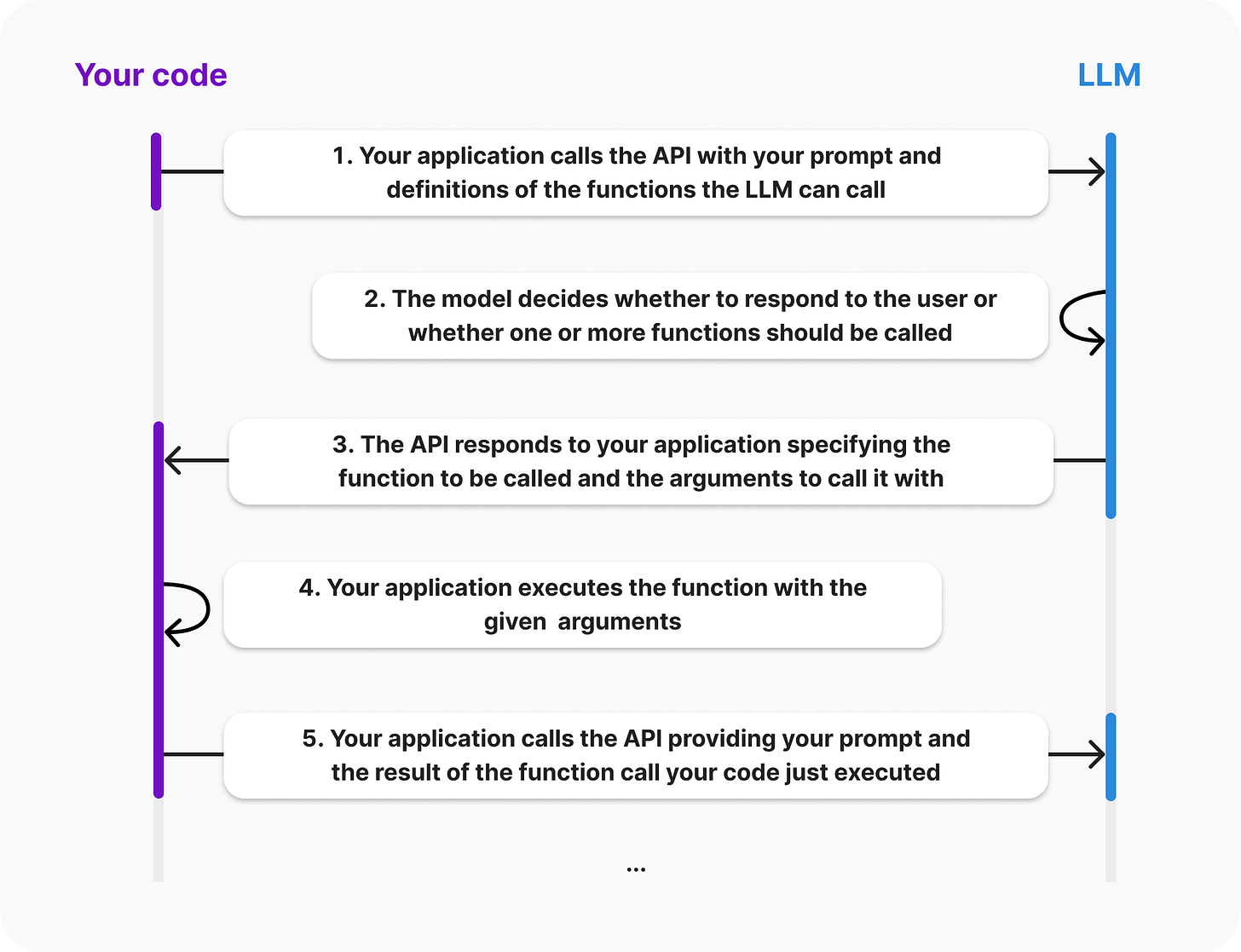

Tool Use or function calling is a fairly new capability with popular LLMs that allows you to suggest custom functions the LLM can choose to use when responding to a prompt. You can think of it as Remote Procedure Calls (RPC), however the LLM does not actually call the function. Instead it tells the client application (your AI agent) which function it wants to call with a set of parameters and it’s up to you to call it and return the results to the model.

The diagram above is a simple flow taken directly from the OpenAI tool calling documentation.

Tools are declared in the API call to the LLM along with the user prompt or a custom prompt you’d generate when prompting other agents. Each tool must also define a JSON schema for the parameters it will accept. Furthermore, you need to clearly and descriptively explain what the tool is for, when and how to use it. The LLM will use this information to decide if and when to use your tool to answer the prompt (there is a way to force the LLM to always use the tool).
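
For a feel of what a tool declaration looks like, here is a sketch in the shape used by the `tools` parameter of the OpenAI Chat Completions API. The tool name `run_lakehouse_sql`, its description and its parameters are hypothetical. Other providers use a similar but not identical layout, so check the documentation for the model you use.

```python
import json

# Tool declaration in the shape of the Chat Completions `tools` parameter.
# The tool name, description and parameters are hypothetical examples.
run_sql_tool = {
    "type": "function",
    "function": {
        "name": "run_lakehouse_sql",
        "description": (
            "Run a read-only SQL SELECT against the sales lakehouse tables. "
            "Use it when the answer depends on company sales data."
        ),
        # JSON schema describing the parameters the tool accepts.
        "parameters": {
            "type": "object",
            "properties": {
                "query": {
                    "type": "string",
                    "description": "A single SELECT statement.",
                },
                "max_rows": {
                    "type": "integer",
                    "description": "Row limit for the result.",
                },
            },
            "required": ["query"],
        },
    },
}

# Passing this as `tool_choice` asks the model to always call the named tool.
forced_tool_choice = {"type": "function", "function": {"name": "run_lakehouse_sql"}}

tools = [run_sql_tool]
print(json.dumps(tools, indent=2))
```

The description fields do real work here: they are the text the model reads when deciding whether and how to call the tool.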

Tool execution is handled by your agent code. This is a chance for you to validate the input parameters and decide how you want to handle the request - run it locally or farm it out to an external system.

As a data engineer, you can think of Tool Use as a way for you to define a set of building block capabilities, as callable functions, that you can reuse in your agents. Furthermore, if you’re supporting other users building their own AI agents on your platform using data you manage, offering these canned functions provides a standard way to interact with data services. For example, you may create a tool for executing SQL queries against tables in your Iceberg lakehouse. Users don’t need to know anything about the underlying store, they simply provide a SQL query as a parameter and your code executes it using the preferred SQL tool, say Amazon Athena. Within your tool’s code you can manage permissions, data masking and any failure scenarios.
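
The execution side of such a SQL tool could look roughly like the sketch below. It is an illustration under several assumptions: SQLite stands in for Athena and the Iceberg tables, the function and table names are made up, and the guardrail is a deliberately naive “single SELECT only” check rather than real permission handling.

```python
import json
import sqlite3
from typing import List


def run_lakehouse_sql(conn: sqlite3.Connection, query: str, max_rows: int = 100) -> List[tuple]:
    # Guardrail: allow only a single SELECT statement (naive check for the sketch).
    statement = query.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("only a single SELECT statement is allowed")
    return conn.execute(statement).fetchmany(max_rows)


def handle_tool_call(conn: sqlite3.Connection, name: str, arguments_json: str) -> str:
    # The model supplies a tool name plus JSON-encoded arguments; we execute.
    args = json.loads(arguments_json)
    if name != "run_lakehouse_sql":
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        rows = run_lakehouse_sql(conn, args["query"], int(args.get("max_rows", 100)))
        return json.dumps({"rows": rows})
    except (KeyError, ValueError, sqlite3.Error) as exc:
        # Return failures as data so the model can react instead of crashing the agent.
        return json.dumps({"error": str(exc)})


# In-memory SQLite with a made-up sales table stands in for the lakehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("east", 100), ("west", 250)])

print(handle_tool_call(conn, "run_lakehouse_sql", '{"query": "SELECT region, amount FROM sales ORDER BY amount"}'))
print(handle_tool_call(conn, "run_lakehouse_sql", '{"query": "DROP TABLE sales"}'))
```

The string returned by `handle_tool_call` is what your agent would send back to the model as the tool result.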

Like any external call or process, Tool Use adds latency and cost both to the model execution and your data infrastructure. Tool Use generates additional prompt text to guide the LLM in choosing it. This text or tokens can add up quickly resulting in higher costs.

Tool Use is still in beta for most LLMs but it’s a very interesting idea that I would expect will become more powerful with time. This is an opportunity for you, the data engineer, to define how LLMs and AI agents interact with your data platform.

AI Agents are changing data engineering as we know it

Ever since ChatGPT was released to the public at the end of 2022, all we’ve been hearing is how AI will take away our jobs. Let’s be honest, some jobs will be lost, but most will just be different. There is so much AI can do to make our jobs better, not just easier.

There are two primary areas where AI and agents are beneficial:

First, AI enables us to automate tasks with a large surface area, like testing and quality validation. Consider an AI agent that every so often executes a set of queries to test different aspects of your data and ensure it meets a certain quality bar. It uses AI to decide when to run, on what portions of the data and what test queries to generate. At the end of each run it emails out a report and updates queryable health tables with new metrics. There is a lot of potential here for engineers to improve and automate processes that require lots of manual work.

Second, AI enables us to better adapt to the dynamic and fluid nature of data. Personally, I’m very excited about this area. For example, we can build an AI agent that monitors your Iceberg lake tables using Iceberg metadata tables combined with query engine metric reporting to decide when and how to optimize Iceberg tables, automatically. Today, much of that knowledge is captured in best practices, baked in your optimization tool of choice and refined over years of real-world experience. With AI, that learning can be accelerated significantly delivering much better performance for cheaper. Keep an eye on this space!

For senior DEs, AI and agents should be exciting: more advanced tools with an opportunity to optimize and improve what they are doing today. For junior DEs and new folks entering the field, AI and agents can provide an opportunity to lead a new way of thinking and doing. Otherwise, entry level jobs will be harder to find as AI will eventually automate them. For DE leaders and managers, AI and agents give you an opportunity to become an impactful profit center and to get out of the endless support role most data organizations deal with today. Bring SWE skills to your team, develop agents and tools for your users to leverage. Support their desire to implement AI with good quality data and your organization will flourish.

I encourage you to dive deep and understand the AI agent approach, its capabilities and limitations, and become well educated in this space. Don’t ignore it.

Good luck!
